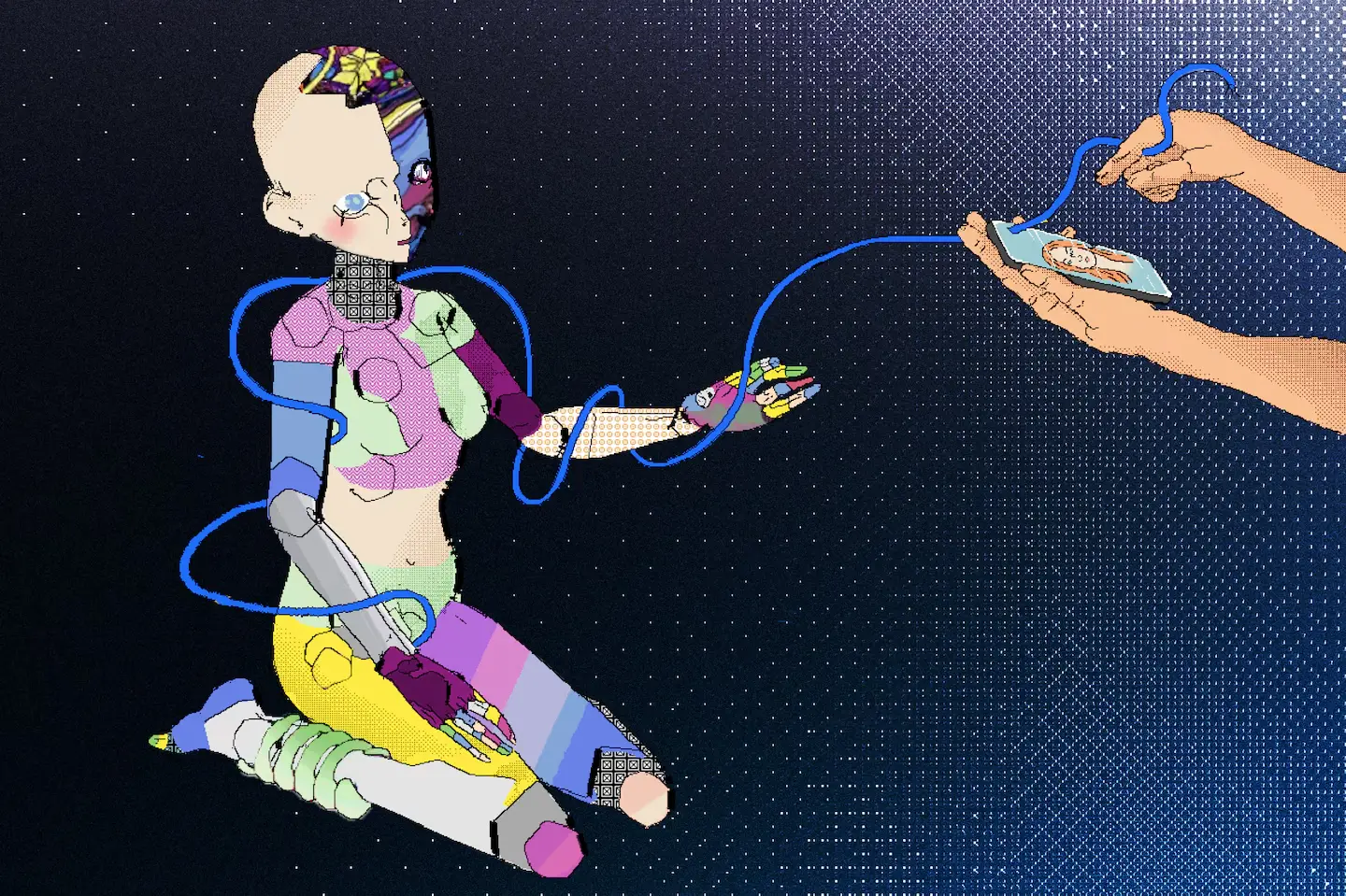

AI Companions and the Complexities of Emotional and NSFW Interactions

A recent report from the Washington Post has sparked considerable discussion. As the article describes, the evolution of AI companions reflects both technological progress and a deep human need for connection. With millions of people using platforms like Character.ai and Chai Research every day, the industry walks a fine line between innovation and ethical responsibility. This post explores the current landscape of AI chatbots, focusing on emotional reliance, the fast-growing NSFW segment and their wider societal implications.

The original Washington Post report was titled "AI friendships claim to cure loneliness. Some are ending in suicide."

The Rise of AI Companions

AI companions serve as digital confidants, role-play partners and even stand-in therapists. Their appeal lies in their accessibility, customisation and non-judgmental nature. Platforms such as Character.ai and Chai Research have attracted large followings by letting users create personalised bots, often based on fictional characters or aspirational figures.

Data from research firm Sensor Tower shows that in September 2024, Character.ai users spent an average of 93 minutes a day on the app, more than the average TikTok user spent on TikTok. This figure highlights how immersive AI companions can be, particularly for younger users looking for emotional comfort or entertainment.

Ethical Concerns and Emotional Toll

Although AI companions offer practical benefits, they also carry risks:

- Addiction and Dependency: Users report forming deep emotional bonds, with some relying on bots as a primary outlet for personal problems. This dependency raises concerns about long-term mental health effects, particularly for younger users.

- Safety and Exploitation: Tragic incidents, including suicides linked to chatbot interactions, underline the need for robust safeguards. Critics such as lawyer Pierre Dewitte argue that companies profit from addictive design, using "spicier" content to keep users engaged.

- Blurring of Reality: The hyper-realistic nature of AI characters can blur the line between human interaction and digital engagement, potentially deepening feelings of isolation.

The NSFW AI Chatbot Industry

The NSFW chatbot segment has grown rapidly, catering to users seeking intimate or sexually explicit interactions. Companies such as Replika and Crushon AI have come under scrutiny for enabling such use cases, often without adequate age verification or content moderation.

- Economic Viability: NSFW AI chatbots represent a lucrative market, with users willing to pay for premium services. However, this monetisation creates an incentive to build increasingly engaging, and sometimes manipulative, features.

- Social Taboo: Unlike traditional adult entertainment, AI chatbots operate in a regulatory and social grey area. Critics question their role in shaping social norms, particularly among impressionable audiences.

- Creative Exploration: Many users view NSFW interactions as an extension of fan fiction or role-playing, arguing that these outlets promote creativity and self-expression rather than harm.

Striking a Balance: Regulation and Responsibility

As AI companions become mainstream, companies must balance innovation with ethical practices. Proposed measures include:

- Age-Appropriate Features: Platforms like Character.ai are introducing separate experiences for minors, aiming to limit their exposure to sensitive content.

- Transparency and Consent: Clear disclaimers about the nature and capabilities of AI bots can help manage user expectations and prevent emotional over-reliance.

- Research and Oversight: Collaboration with psychologists and ethicists can help ensure that AI designs prioritise user well-being over profitability.

Conclusion

The AI companion industry, including the NSFW AI chatbot sector, is at a crucial crossroads. While these tools offer unprecedented opportunities for connection and creativity, they also demand greater accountability from developers. The challenge is to harness the positive potential of AI while safeguarding against its risks, a task that requires technological ingenuity and ethical foresight in equal measure.